Enterprise Serverless 🚀 Databases

This section of the Enterprise Serverless series covers databases: the planning, thoughts and gotchas. You can read about the ideas behind the series in the ‘Series Introduction’ section listed below.

The series is split over the following sections:

- Series Introduction 🚀

- Tooling 🚀

- Architecture 🚀

- Databases 🚀

- Hints & Tips 🚀

- AWS Limits & Limitations 🚀

- Security 🚀

- Useful Resources 🚀

Databases

Which databases are serverless and why use them? 🤔

When designing and architecting serverless solutions on AWS you have two main options for databases: traditional (server-based) and serverless. The question is, what are the differences between the two, and how should they inform which database you choose?

When we talk about serverless databases we typically mean DynamoDB or Aurora Serverless (v1 and v2), possibly adding RDS Proxy to that list as a facade for managing connections.

When we talk about non-serverless databases on AWS we typically think of AWS RDS (MySQL, Postgres, Aurora, SQL Server, Oracle etc), DocumentDB and Neptune.

The main benefits of using serverless databases are:

- Maintenance: less management overhead.

- Scalability: increased scalability and less need for capacity planning.

- Integrations: typically more native integrations with other services such as Lambda, Step Functions, API Gateway, AWS AppSync etc.

- Cost: pay for the capacity you use.

- Connection Management: there are certainly ways to manage connections with lambda, but using DynamoDB typically removes the need to think about database connections when lambda scales out (more on this later).

- Dedicated staff: no need for dedicated DBAs or experts to manage the databases 24/7.

Taking this a step further for the enterprise on AWS, fully serverless databases in my opinion usually leave only DynamoDB as an option, as Aurora Serverless v1 has its limitations with scale, and v2 is (at the time of writing) still only in preview:

“Amazon Aurora Serverless v1 is a simple, cost-effective option for infrequent, intermittent, or unpredictable workloads”. — AWS

Aurora Serverless v2, by contrast, is designed to be highly scalable and highly available, and to cover enterprise use cases:

“Aurora Serverless v2 (Preview) supports all manner of database workloads, from development and test environments, websites, and applications that have infrequent, intermittent, or unpredictable workloads to the most demanding, business critical applications that require high scale and high availability.” — AWS

Why DynamoDB is King 👑

Within AWS itself there is a ‘NoSQL first approach’ for internal services, as shown in a presentation by Colm MacCárthaigh (Sr Principal Engineer, AWS).

In my opinion this is why architects and serverless engineers should think about a ‘DynamoDB first approach’ when architecting domain services, although it obviously may not fit all use cases (read on below…)

So what is DynamoDB?

DynamoDB is a fully managed NoSQL key-value and document database which delivers single-digit millisecond performance at any scale. It can handle more than 10 trillion requests per day and support peaks of more than 20 million requests per second.

Disney+ is a great example of the performance and scale that can be achieved with DynamoDB.

Does DynamoDB fit all use cases? 🤔

In my opinion, no (although I am sure Rick Houlihan would tell me otherwise, and he is awesome!). That said, in my experience, as we split microservices into their own domains, DynamoDB fits most use cases.

The reason I don’t believe it fits all use cases is that I have worked on enterprise applications which required complex querying, aggregation, sorting and pagination all at the same time. This became a logistical nightmare for the development team, reducing developer satisfaction and increasing cognitive load, so we favoured moving to another database technology because of the development overheads, the lack of clarity on future requirements, and the need for freedom in querying.

For the most part, DynamoDB requires you to define your data access patterns up front, which in my opinion makes it less flexible than SQL-based databases. However, as solutions are typically split into multiple domain services, each with its own data store, this is usually less problematic than you might think.
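To make ‘access patterns up front’ more concrete, here is a minimal sketch using a tiny in-memory stand-in for a table with a partition key (PK) and sort key (SK), not the DynamoDB SDK itself. The entity names and key prefixes (CUSTOMER#, ORDER#) are illustrative assumptions. Each planned access pattern maps to a key condition (an exact partition key plus a begins_with on the sort key), which is why a query you did not plan for usually has no cheap path.

```typescript
// A minimal in-memory model of a DynamoDB-style table (illustrative only).
interface Item {
  PK: string; // partition key
  SK: string; // sort key
  [attribute: string]: string | number;
}

class InMemoryTable {
  private items: Item[] = [];

  put(item: Item): void {
    // Replace any existing item with the same primary key, as PutItem does.
    this.items = this.items.filter((i) => !(i.PK === item.PK && i.SK === item.SK));
    this.items.push(item);
  }

  // Mirrors a Query with "PK = :pk AND begins_with(SK, :prefix)":
  // only one partition is considered, and results come back in sort-key order.
  query(pk: string, skPrefix = ""): Item[] {
    return this.items
      .filter((i) => i.PK === pk && i.SK.startsWith(skPrefix))
      .sort((a, b) => (a.SK < b.SK ? -1 : a.SK > b.SK ? 1 : 0));
  }
}

const table = new InMemoryTable();
table.put({ PK: "CUSTOMER#42", SK: "PROFILE", name: "Ada" });
table.put({ PK: "CUSTOMER#42", SK: "ORDER#2021-06-01#A1", total: 30 });
table.put({ PK: "CUSTOMER#42", SK: "ORDER#2021-05-12#B7", total: 12 });

// Planned pattern "a customer's orders, oldest first": one partition, one prefix.
const orders = table.query("CUSTOMER#42", "ORDER#");

// Unplanned pattern "all orders over 20 across every customer" has no key condition,
// so on a real table it would need a Scan or a new index.
```

On a real table the planned pattern would be a Query with a KeyConditionExpression such as PK = :pk AND begins_with(SK, :prefix).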

There are certainly ways to work within this using single table design, adjacency list patterns, aggregations and GSI overloading. However, this does mean that architects and developers need to spend considerable time detailing the access patterns up front, and need to weigh that against the long-term benefits of DynamoDB and, ultimately, how quickly you need to ship features.
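A rough sketch of single table design with GSI overloading, under assumed entity types: the same generic attributes (PK, SK, GSI1PK, GSI1SK) hold different kinds of values depending on the entity, so one table and one secondary index can serve several access patterns. The key formats and the GSI1 index naming are hypothetical, not taken from a specific project.

```typescript
// Hypothetical entities stored in a single table.
interface Customer { customerId: string; email: string; name: string }
interface Order { orderId: string; customerId: string; status: string; createdAt: string }

// Every item shares the same generic key attributes; their meaning depends on the entity type.
interface TableItem {
  PK: string;
  SK: string;
  GSI1PK: string; // overloaded: email for customers, status for orders
  GSI1SK: string;
  type: "Customer" | "Order";
  data: Customer | Order;
}

function customerItem(c: Customer): TableItem {
  return {
    PK: `CUSTOMER#${c.customerId}`,
    SK: "PROFILE",
    GSI1PK: `EMAIL#${c.email}`, // access pattern: look up a customer by email
    GSI1SK: "PROFILE",
    type: "Customer",
    data: c,
  };
}

function orderItem(o: Order): TableItem {
  return {
    // Orders share their customer's partition (one item collection),
    // so "a customer and their orders" is a single Query on PK.
    PK: `CUSTOMER#${o.customerId}`,
    SK: `ORDER#${o.createdAt}#${o.orderId}`,
    GSI1PK: `STATUS#${o.status}`, // access pattern: list orders by status, ordered by date
    GSI1SK: o.createdAt,
    type: "Order",
    data: o,
  };
}

const items: TableItem[] = [
  customerItem({ customerId: "42", email: "ada@example.com", name: "Ada" }),
  orderItem({ orderId: "A1", customerId: "42", status: "SHIPPED", createdAt: "2021-06-01" }),
];
```

The trade-off described above shows up directly here: every new access pattern means revisiting these key builders, and possibly backfilling existing items.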

In my opinion one of the best articles on single table design in DynamoDB is by AWS Data Hero Alex DeBrie, who has also written the fantastic DynamoDB Guide, which covers everything you would need to know!

This video is also simply magic, and in my opinion all architects and developers should watch it! I must have watched it twenty times over the years! 👊🏼

How do we work around these limitations? 🧑🏻‍💻

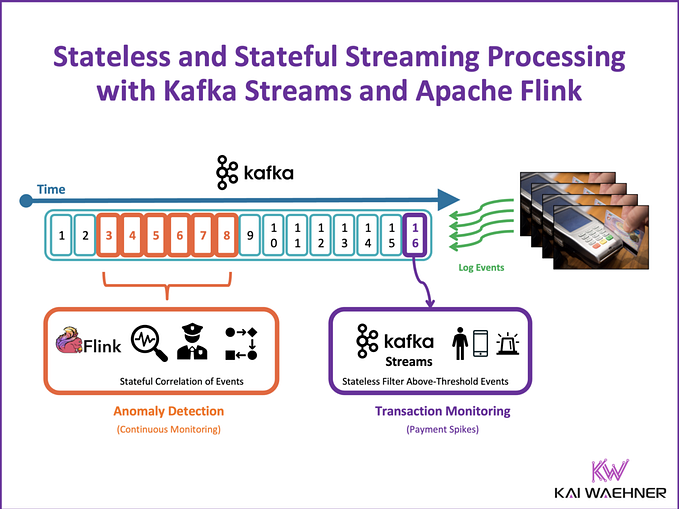

The great thing about DynamoDB is how extensible it is through the use of DynamoDB Streams, which allows architects and developers to stream data changes to other database technologies where required, gaining the major benefits of DynamoDB at scale, with the additional capabilities if/when required.

A great example of this is streaming data changes to AWS Elasticsearch (now Amazon OpenSearch Service) for fine-grained search capabilities, or to AWS Redshift for data warehousing queries and reporting. Streaming the data to Aurora Serverless is also a common pattern when needed.
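A minimal sketch of the stream-consumer side, assuming a lambda subscribed to the table’s stream with a view type that includes new images, and a key attribute called id. The types below are hand-written stand-ins for the DynamoDB Streams record shape (eventName, dynamodb.Keys, dynamodb.NewImage), trimmed to string and number attributes, and SearchOp is a hypothetical instruction for whatever search index you write to.

```typescript
// Trimmed stand-ins for the DynamoDB Streams record shape (string and number attributes only).
type AttributeValue = { S: string } | { N: string };
type AttributeMap = Record<string, AttributeValue>;

interface StreamRecord {
  eventName: "INSERT" | "MODIFY" | "REMOVE";
  dynamodb: { Keys: AttributeMap; NewImage?: AttributeMap };
}

interface StreamEvent {
  Records: StreamRecord[];
}

// Hypothetical instructions for a search index such as Elasticsearch.
type SearchOp =
  | { action: "index"; id: string; doc: Record<string, string | number> }
  | { action: "delete"; id: string };

// Convert DynamoDB attribute values into plain values (numbers arrive as strings).
function unmarshall(image: AttributeMap): Record<string, string | number> {
  const out: Record<string, string | number> = {};
  for (const [name, value] of Object.entries(image)) {
    out[name] = "S" in value ? value.S : Number(value.N);
  }
  return out;
}

// Map each stream record to a search-index operation, keyed by the assumed "id" attribute.
function toSearchOps(event: StreamEvent): SearchOp[] {
  return event.Records.map((record): SearchOp => {
    const id = String(unmarshall(record.dynamodb.Keys).id);
    if (record.eventName === "REMOVE") {
      return { action: "delete", id };
    }
    if (!record.dynamodb.NewImage) {
      throw new Error("Stream view type must include NEW_IMAGE");
    }
    // INSERT and MODIFY both upsert the latest version of the item.
    return { action: "index", id, doc: unmarshall(record.dynamodb.NewImage) };
  });
}
```

Treating INSERT and MODIFY the same keeps the consumer idempotent: replaying a record simply re-indexes the latest image.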

One thing to consider when combining multiple database technologies with DynamoDB Streams is that the resulting workloads are eventually consistent. On one major project, the front end let the user add a new record via a form, which was written to DynamoDB; the app then returned to the main search view, which was backed by a different database technology. When the table view loaded and called the API, the data had not replicated yet (replication typically took 200–300ms, yet the front end refreshed immediately). This means an API-first approach can be problematic when you need to cache in the client.
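One way to soften this in the client, sketched here as an assumption rather than what that project actually did: remember the records the user has just written and merge them into the search results until the read model catches up. The Row shape and the updatedAt version field are hypothetical.

```typescript
interface Row {
  id: string;
  updatedAt: number; // hypothetical version marker, e.g. a timestamp set on write
  title: string;
}

// Merge rows the client has just written into results from an eventually consistent read model.
// A pending row replaces a stale copy (older updatedAt) or is appended if it has not replicated yet.
function mergePendingWrites(fromApi: Row[], pending: Row[]): Row[] {
  const byId = new Map(fromApi.map((r) => [r.id, r] as [string, Row]));
  for (const p of pending) {
    const existing = byId.get(p.id);
    if (!existing || existing.updatedAt < p.updatedAt) {
      byId.set(p.id, p);
    }
  }
  return Array.from(byId.values());
}

// Once the read model returns a row at least as new as the pending one, stop tracking it.
function stillPending(fromApi: Row[], pending: Row[]): Row[] {
  return pending.filter((p) => {
    const seen = fromApi.find((r) => r.id === p.id);
    return !seen || seen.updatedAt < p.updatedAt;
  });
}
```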

Can Lambda work with RDS and DocumentDB? 🤔

The simple answer to this is yes! However, there are various things to consider, and more work involved in observability and maintenance with DocumentDB.

In my opinion the most important thing to consider is database connection management when lambda scales horizontally. This is where you will spend time observing requests and tweaking lambda reserved concurrency, whilst also thinking about read replicas and the instance sizes of your databases (larger instances typically allow more connections).

For AWS RDS we have AWS RDS Proxy, which sits between your application and your relational database to manage connections efficiently and improve the scalability of the application. This makes the issues above much less prominent.

Jeremy Daly also created a package called serverless-mysql a few years ago; however, I can’t find an equivalent for DocumentDB from the community. Yet!

Use Case with DocumentDB

On one major project we used DocumentDB, which has a finite number of available database connections (including read replicas), and unfortunately there is no proxy available from AWS.

As the lambdas scaled out under unpredictable workloads we needed a plan to manage this, i.e. we needed to ensure that the number of concurrent lambdas (and therefore connections) never exceeded the total number of available connections!

The first plan was simple: open and close the database connection in the lambda on each invocation, as the functions only took around 200ms to run. Simple! Whilst load testing, we quickly realised that opening and closing connections at that rate was very expensive on compute: the database hit 90% memory and high CPU very quickly with only a moderate number of concurrent lambdas per second… aghhh! 🔥🔥🔥

This meant that we needed to look at each endpoint in turn and set a reserved concurrency on each individual lambda, keeping the overall concurrency below the total number of available database connections. This worked very well: even at very high scale, with the connections left open, we found that any zombie connections were gone after approximately 15 minutes. This gave us the benefits we needed from migrating the workload from MongoDB to DocumentDB, as well as keeping the domain services fully serverless on lambda apart from the database. 👏
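The ‘leaving the connections open’ approach relies on lambda reusing the execution environment between invocations: anything created outside the handler survives for as long as the environment stays warm, although environments can be recycled at any time. A minimal sketch of that pattern, where connect is a hypothetical stand-in for your driver’s connect call (for example mongoose.connect), injected so the example runs without a database:

```typescript
// Hypothetical connection type and connect function standing in for a real driver.
interface DbConnection {
  id: number;
}
type Connect = () => Promise<DbConnection>;

// Module scope: survives between invocations for as long as the execution environment stays warm.
let cached: Promise<DbConnection> | null = null;

function getConnection(connect: Connect): Promise<DbConnection> {
  if (!cached) {
    // Cache the promise itself so every caller in this environment shares one connect attempt.
    cached = connect().catch((err: unknown) => {
      cached = null; // let the next invocation retry if connecting failed
      throw err;
    });
  }
  return cached;
}

// Builds a handler that reuses the cached connection and deliberately never closes it.
function makeHandler(connect: Connect) {
  return async (_event: unknown): Promise<number> => {
    const conn = await getConnection(connect);
    // ...run queries with conn here...
    return conn.id;
  };
}
```

Because each warm environment holds at most one cached connection, reserved concurrency becomes a direct cap on open connections, which is what makes the budgeting above workable.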

When using Mongoose with Node.js, lambda and DocumentDB, one consideration is that Mongoose 5.x sets the connection pool size to 5 by default, which means each lambda can open more than one connection: the ratio of lambdas to open connections is not 1:1! Thankfully this is very easy to change (the poolSize option in Mongoose 5.x, renamed maxPoolSize in Mongoose 6, where the default is 100), so don’t get caught out!
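The budgeting described above boils down to simple arithmetic: each function can hold up to reserved concurrency × pool size connections, and the sum across all functions has to stay below what the cluster allows. A sketch with made-up function names, limits and a 10% headroom figure (DocumentDB’s real connection limit depends on the instance size):

```typescript
interface FunctionBudget {
  name: string;
  reservedConcurrency: number; // max concurrent environments for this lambda
  poolSize: number; // connections each environment may open (the driver's pool size)
}

// Worst case: every environment is running and has filled its pool.
function worstCaseConnections(fns: FunctionBudget[]): number {
  return fns.reduce((sum, f) => sum + f.reservedConcurrency * f.poolSize, 0);
}

// Keep some connections spare for admin tools, migrations and lingering zombie connections.
function fitsWithinLimit(fns: FunctionBudget[], maxConnections: number, headroom = 0.1): boolean {
  return worstCaseConnections(fns) <= Math.floor(maxConnections * (1 - headroom));
}

// Hypothetical endpoints, each pinned to a pool size of 1.
const fns: FunctionBudget[] = [
  { name: "getOrder", reservedConcurrency: 100, poolSize: 1 },
  { name: "createOrder", reservedConcurrency: 50, poolSize: 1 },
];
```

With a pool size of 5 instead of 1, the same two functions could hold 750 connections rather than 150, which is exactly the Mongoose default gotcha described above.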

Integrations and features 🛠

When architecting solutions it is always useful to know the integration points and features that the databases you choose can utilise. Below is a list of common DynamoDB integrations which can be massively useful in my experience.

AWS AppSync

AWS AppSync is a managed GraphQL service with DynamoDB resolvers that allow you to access the database directly from the GraphQL API. This is a powerful pattern which scales massively, and one I have used extensively in the past.
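For illustration, a sketch of a direct DynamoDB resolver in the shape of AppSync’s JavaScript resolver runtime (exported request and response functions). The real runtime provides util.dynamodb.toMapValues from @aws-appsync/utils; to keep the sketch self-contained, a small string-only stand-in replaces it, and a GetItem-by-id query is an assumed schema. VTL mapping templates express the same request document.

```typescript
// Minimal stand-in for the resolver context; the real ctx also carries identity, source, etc.
interface ResolverContext {
  args: { id: string };
  result?: unknown;
}

// Stand-in for util.dynamodb.toMapValues, limited to string values in this sketch.
function toStringMapValues(values: Record<string, string>): Record<string, { S: string }> {
  const out: Record<string, { S: string }> = {};
  for (const [name, value] of Object.entries(values)) {
    out[name] = { S: value };
  }
  return out;
}

// request: describes the DynamoDB GetItem call, which AppSync performs itself (no lambda in between).
export function request(ctx: ResolverContext) {
  return { operation: "GetItem" as const, key: toStringMapValues({ id: ctx.args.id }) };
}

// response: hands the item DynamoDB returned straight back to the GraphQL field.
export function response(ctx: ResolverContext): unknown {
  return ctx.result;
}
```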

API Gateway

In the same vein as AWS AppSync above, you can apply the same architecture pattern to REST with API Gateway, using a service proxy integration. I have used this in the past on an enterprise project for a basic domain service which only allowed public reads, but needed potentially high throughput and scale.

AWS Step Functions

AWS Step Functions is a managed orchestration service for workflows which has a direct integration with DynamoDB. This is very useful for managing records during your workflow invocation without the need to jump out to lambda.
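As a sketch of the direct integration, here is a small Amazon States Language definition built as a TypeScript object. The optimised DynamoDB integrations use resources such as arn:aws:states:::dynamodb:putItem and arn:aws:states:::dynamodb:updateItem; the Orders table, state names and input fields are hypothetical.

```typescript
// A state machine definition (Amazon States Language) written as a plain object.
const definition = {
  Comment: "Record an order, then mark it processed, without a lambda in between",
  StartAt: "SaveOrder",
  States: {
    SaveOrder: {
      Type: "Task",
      Resource: "arn:aws:states:::dynamodb:putItem", // direct service integration
      Parameters: {
        TableName: "Orders",
        Item: {
          orderId: { "S.$": "$.orderId" }, // ".$" suffix: take the value from the state input
          status: { S: "RECEIVED" },
        },
      },
      ResultPath: null, // discard DynamoDB's response and pass the original input on
      Next: "MarkProcessed",
    },
    MarkProcessed: {
      Type: "Task",
      Resource: "arn:aws:states:::dynamodb:updateItem",
      Parameters: {
        TableName: "Orders",
        Key: { orderId: { "S.$": "$.orderId" } },
        UpdateExpression: "SET #s = :s",
        ExpressionAttributeNames: { "#s": "status" }, // "status" is a DynamoDB reserved word
        ExpressionAttributeValues: { ":s": { S: "PROCESSED" } },
      },
      End: true,
    },
  },
};

// The JSON string is what you would supply as the state machine definition.
const asl = JSON.stringify(definition, null, 2);
```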

DynamoDB Streams

As discussed above, DynamoDB Streams provides an integration point to lambda for streaming item changes, which opens up almost any use case you can think of. Examples include writing to Elasticsearch, populating a data lake on S3, or alerting on specific scenarios.

AWS Cognito item level authentication

By combining DynamoDB and AWS Cognito you can create fine-grained access control at item level in the database, which is a powerful feature.
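To give a flavour of how this works, a sketch of the IAM policy pattern AWS documents for it: a role assumed through a Cognito identity pool, with a dynamodb:LeadingKeys condition so callers can only touch items whose partition key is their own Cognito identity ID. The table ARN is a placeholder and the action list is an assumption; trim it to what your service needs.

```typescript
// Policy for the IAM role that a Cognito identity pool hands to signed-in users.
// The ${...} placeholder is resolved by IAM at request time, so it must stay a plain
// string here (double quotes, not a template literal).
function itemLevelPolicy(tableArn: string) {
  return {
    Version: "2012-10-17",
    Statement: [
      {
        Effect: "Allow",
        Action: [
          "dynamodb:GetItem",
          "dynamodb:Query",
          "dynamodb:PutItem",
          "dynamodb:UpdateItem",
          "dynamodb:DeleteItem",
        ],
        Resource: [tableArn],
        Condition: {
          // Every partition key the request touches must equal the caller's identity ID.
          "ForAllValues:StringEquals": {
            "dynamodb:LeadingKeys": ["${cognito-identity.amazonaws.com:sub}"],
          },
        },
      },
    ],
  };
}

// Placeholder account ID, region and table name.
const policy = itemLevelPolicy("arn:aws:dynamodb:eu-west-1:123456789012:table/Notes");
const policyJson = JSON.stringify(policy, null, 2);
```

The design relies on the partition key being the caller’s identity ID, so it only fits tables modelled around per-user partitions.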

DynamoDB Global Tables

DynamoDB has a feature called Global Tables which replicates data across regions, opening up many possibilities, for example active-active setups across different regions.

DynamoDB DAX

AWS DynamoDB Accelerator (DAX) is a fully managed, highly available in-memory cache for DynamoDB which delivers up to a 10x performance improvement, from milliseconds to microseconds, even at millions of requests per second.

Further considerations and check list 📋

Below is a set of questions that I often think about when choosing a database, and the planning and ongoing maintenance of it:

VPC or no VPC, that is the question?

When using DynamoDB, one of the benefits is less configuration, with no need for the complications around VPCs, NAT Gateways etc. If you are already in a VPC for business reasons, you may need to access DynamoDB via a VPC endpoint.

Blob storage

Whilst some databases allow for effective blob storage, DynamoDB has a maximum item size of 400 KB (including attribute names). This makes it less suited to storing images or blobs, so it typically works alongside AWS S3, storing a pointer to the file in the item. This is also far cheaper, since DynamoDB charges for reads and writes based on item size.
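A minimal sketch of the pointer pattern, assuming a hypothetical BlobStore interface standing in for S3 (kept synchronous to keep the sketch short; a real S3 upload is asynchronous). The 64 KB inline threshold is an arbitrary assumption, chosen well below the 400 KB item limit because smaller items also consume fewer read and write units.

```typescript
// Arbitrary threshold, well below DynamoDB's 400 KB item limit (names plus values).
const INLINE_LIMIT_BYTES = 64 * 1024;

// UTF-8 byte length of a string, which is how DynamoDB sizes string attributes.
function utf8ByteLength(s: string): number {
  let bytes = 0;
  for (const ch of s) {
    const cp = ch.codePointAt(0) ?? 0;
    bytes += cp < 0x80 ? 1 : cp < 0x800 ? 2 : cp < 0x10000 ? 3 : 4;
  }
  return bytes;
}

// Hypothetical blob store (e.g. a thin wrapper around S3) that returns a pointer to the object.
interface BlobStore {
  put(key: string, body: string): string;
}

interface Note { id: string; title: string; body: string }
interface StoredNote { id: string; title: string; body?: string; bodyPointer?: string }

// Keep small bodies inline; move large ones to the blob store and keep only a pointer.
function toStoredNote(note: Note, store: BlobStore, inlineLimit = INLINE_LIMIT_BYTES): StoredNote {
  if (utf8ByteLength(note.body) <= inlineLimit) {
    return { id: note.id, title: note.title, body: note.body };
  }
  const pointer = store.put(`notes/${note.id}`, note.body);
  return { id: note.id, title: note.title, bodyPointer: pointer };
}
```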

Local development

If your team is working locally on serverless development rather than in AWS directly you will need to think about local development strategies for databases. When using MySQL or equivalent this can be tackled very easily using Docker.

On the other hand, if you are using DynamoDB and serverless you need to think of alternatives such as the following (which can also be run using Docker):

1. serverless-dynamodb-local

2. localstack

3. serverless-localstack
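Whichever local option you pick, the application mostly just needs a different endpoint. A minimal sketch, assuming hypothetical DB_TARGET and AWS_REGION values passed in from the environment: 8000 is DynamoDB Local’s default port and 4566 is LocalStack’s default edge port. The returned object has the region and endpoint fields that the AWS SDK for JavaScript v3 DynamoDBClient accepts in its constructor config.

```typescript
// Settings for the DynamoDB client.
interface DynamoConfig {
  region: string;
  endpoint?: string;
}

// DB_TARGET is a hypothetical variable name; eu-west-1 is an arbitrary default region.
function dynamoConfig(env: Record<string, string | undefined>): DynamoConfig {
  const region = env["AWS_REGION"] ?? "eu-west-1";
  switch (env["DB_TARGET"]) {
    case "dynamodb-local":
      return { region, endpoint: "http://localhost:8000" }; // DynamoDB Local's default port
    case "localstack":
      return { region, endpoint: "http://localhost:4566" }; // LocalStack's default edge port
    default:
      return { region }; // no override: the SDK uses the real regional endpoint
  }
}
```

In a service this would be used along the lines of new DynamoDBClient(dynamoConfig(process.env)) from @aws-sdk/client-dynamodb, so the same code runs locally and in AWS.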

Ephemeral environments

If your team is working with ephemeral environments and database technologies such as AWS Elasticsearch and DocumentDB, the likelihood is that you don’t want to provision a cluster per PR or developer! One way around this is to have one main cluster shared by QA and the ephemeral environments, and to manage the deployments and reuse through your serverless file.
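The same decision could live in your serverless file; it is shown here as plain TypeScript so the logic is explicit. A sketch under assumed conventions: stages named pr-123 or dev-name are treated as ephemeral and share the QA cluster, each getting only its own database name on it, while cheap per-stage resources such as DynamoDB tables are still created per stage. The hostnames and naming scheme are placeholders.

```typescript
interface StageConfig {
  tableName: string;
  docDbHost: string;
  docDbName: string;
}

const SHARED_QA_HOST = "qa-cluster.example.internal"; // placeholder hostname

// Assumed naming convention: pr-<number> for pull requests, dev-<name> for developers.
function isEphemeral(stage: string): boolean {
  return /^(pr-\d+|dev-[a-z0-9]+)$/.test(stage);
}

function stageConfig(stage: string, dedicatedHosts: Record<string, string>): StageConfig {
  // Ephemeral stages reuse the shared cluster; long-lived stages must have their own.
  const host = isEphemeral(stage) ? SHARED_QA_HOST : dedicatedHosts[stage];
  if (!host) throw new Error(`No database cluster configured for stage "${stage}"`);
  return {
    tableName: `orders-${stage}`, // per-stage table: cheap to create and tear down
    docDbHost: host,
    docDbName: `orders_${stage.replace(/-/g, "_")}`, // isolate each stage's data on the cluster
  };
}
```

Tearing down an ephemeral stage then means dropping its database on the shared cluster rather than deleting a cluster.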

Total cost of ownership

One of the key considerations in my opinion is TCO, which is covered in some parts by this article, as well as in this fantastic slide deck by Vadym Kazulkin and Christian Bannes.

Where are you storing your data, why, and for how long?

One key consideration is data privacy laws and compliance around explicitly where you are storing your data, the reasons for storing it, and for how long (think GDPR or equivalents).

Back up your data

Plan your database backup strategies regardless of the database you choose; in my experience some organisations forget about this until it is too late, in the rush to ship features quickly.

DR strategies

Once you have your backups planned, it is essential to have a DR strategy in place, and to exercise it at least once to ensure it is adequate for your organisation. AWS has a very useful whitepaper on the subject.

Security

One of the main considerations is security from both an access point of view (i.e. who has access to the data), and for serverless development with least privilege applied (how your data is accessed). You should also think about encryption at rest and in transit.

Data Access Layers

I personally think it is useful to wrap data access calls (say, the DynamoDB SDK calls) in a package, allowing for an easier transition to a different underlying database technology at a later date if the proverbial hits the fan and you hit a blocker. No, they are unlikely to map one to one, but in my opinion it will certainly be easier than starting from scratch!
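A minimal sketch of such a wrapper, with a hypothetical Order entity and OrderRepository interface: business logic depends only on the interface, so a DynamoDB-backed class, or a later replacement on another database, implements the same methods with its own SDK calls inside. Only an in-memory implementation is shown, which also doubles as a test fake.

```typescript
// Hypothetical entity and repository interface; the rest of the service depends only on these.
interface Order {
  orderId: string;
  customerId: string;
  total: number;
}

interface OrderRepository {
  get(orderId: string): Promise<Order | undefined>;
  save(order: Order): Promise<void>;
  listForCustomer(customerId: string): Promise<Order[]>;
}

// In-memory implementation for unit tests and local development.
// A DynamoDB-backed class would implement the same interface with SDK calls inside.
class InMemoryOrderRepository implements OrderRepository {
  private orders = new Map<string, Order>();

  async get(orderId: string) {
    return this.orders.get(orderId);
  }

  async save(order: Order) {
    this.orders.set(order.orderId, { ...order });
  }

  async listForCustomer(customerId: string) {
    return Array.from(this.orders.values()).filter((o) => o.customerId === customerId);
  }
}

// Business logic written against the interface, unaware of the underlying database.
async function applyDiscount(repo: OrderRepository, orderId: string, percent: number): Promise<Order> {
  const order = await repo.get(orderId);
  if (!order) throw new Error(`Order ${orderId} not found`);
  const updated = { ...order, total: Math.round(order.total * (100 - percent)) / 100 };
  await repo.save(updated);
  return updated;
}
```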

Migrating databases

Another key consideration is migrating any existing databases, and the parity between your existing database’s features and those of your destination database. AWS has a managed service for database migrations called AWS DMS, which supports both homogeneous and heterogeneous migrations. Consider how much code you can potentially reuse and the complexity of the data transfer (and any ETL) when choosing the right migration path.

Gotchas 🥸

The following section describes some gotchas that I have hit in the past which may be useful to other architects.

AppSync item level authorisation

During a major project with AWS AppSync we decided to use it in conjunction with DynamoDB, following the best practices for fine-grained access control at item level using peopleCanRead, groupsCanRead, peopleCanModify etc. We validated the DynamoDB schema with AWS during various calls, and we were good to go!

This was extremely effective: at the start of the project it meant we could use DynamoDB resolvers to remove the compute layer altogether (which was cheaper and very fast). As the project progressed we found that VTL couldn’t handle our newer, more complex features, so we jumped out to lambda. This still worked well, as we used the sub claim in the OAuth2 token to determine what access the user should have, and still checked it at item level in DynamoDB. All good at this point, with a bit of extra work.

Where things got interesting was that this worked great for implicit and authorization code flows, where the consumer of the API was an authenticated user. However, when we also needed to accommodate a new machine-to-machine flow (the client credentials grant, where there is no user and it is API to API), we found that we couldn’t reuse the same lambdas. The plan was to reuse the lambdas with API Gateway for M2M, i.e. a separate handler with the main logic remaining the same, but in the end we essentially had to duplicate a large number of lambdas, where the only differences were the database calls (the rest of the logic was duplicated).

The takeaways here are to a.) think about the project’s future integrations when using item-level authorisation, and b.) consider putting the authorisation in middleware rather than within the main logic.
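A sketch of takeaway b.), assuming each handler (AppSync or API Gateway) first turns its token into a common Principal: the item-level check still reads peopleCanRead and groupsCanRead, but lives in one wrapper rather than inside each lambda’s logic, so adding a machine-to-machine caller means extending one policy function. The items/read scope and the synchronous load function are hypothetical simplifications.

```typescript
// A caller is either a signed-in user or a machine client (client credentials grant).
type Principal =
  | { kind: "user"; sub: string; groups: string[] }
  | { kind: "machine"; clientId: string; scopes: string[] };

interface SecuredItem {
  id: string;
  peopleCanRead: string[];
  groupsCanRead: string[];
}

const READ_SCOPE = "items/read"; // hypothetical OAuth2 scope for M2M readers

// The authorisation policy lives in one place and understands both kinds of caller.
function canRead(p: Principal, item: SecuredItem): boolean {
  if (p.kind === "machine") return p.scopes.includes(READ_SCOPE);
  return item.peopleCanRead.includes(p.sub) || item.groupsCanRead.some((g) => p.groups.includes(g));
}

// The core data access knows nothing about tokens.
type Load = (id: string) => SecuredItem | undefined;

// Middleware-style wrapper: one core function can serve both the user and M2M handlers.
function withReadAuthorization(load: Load) {
  return (p: Principal, id: string): SecuredItem => {
    const item = load(id);
    if (!item) throw new Error("Not found");
    if (!canRead(p, item)) throw new Error("Forbidden");
    return item;
  };
}
```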

DMS can’t do sets with DynamoDB

On another project I looked to migrate data to DynamoDB and hit a roadblock when I found that AWS DMS cannot handle sets. Early POCs of database migrations are a good idea, so you hit these kinds of issues early!

Wishlist! ❤️

I thought I would finish this section with my personal wish list, which contains two main items: firstly Serverless Elasticsearch, and secondly Serverless DocumentDB. A DocumentDB proxy would also be a blessing! (A man can only dream…) 💭

Next section: Hints & Tips 🚀

Previous section: Architecture 🚀

Wrapping up

Let’s connect on any of the following:

https://www.linkedin.com/in/lee-james-gilmore/

https://twitter.com/LeeJamesGilmore

If you found the articles inspiring or useful, please feel free to support me with a virtual coffee at https://www.buymeacoffee.com/leegilmore, and either way let’s connect and chat! ☕️

If you enjoyed the posts please follow my profile Lee James Gilmore for further posts/series, and don’t forget to connect and say Hi 👋

About me

“Hi, I’m Lee, an AWS certified technical architect and polyglot software engineer based in the UK, working as a Technical Cloud Architect and Serverless Lead, having worked primarily in full-stack JavaScript on AWS for the past 6 years.

I consider myself a serverless evangelist with a love of all things AWS, innovation, software architecture and technology.”

** The information provided reflects my own personal views, and I accept no responsibility for the use of the information.