Serverless Caching Strategies — Part 4 (AppSync) 🚀

How to use serverless caching strategies within your solutions, with code examples and visuals, written in TypeScript and the CDK, with an associated code repository on GitHub. Part 4 covers caching in AWS AppSync.

Introduction

This is Part 4 of a series of articles covering serverless caching strategies on AWS, and why you should use them. The GitHub repo can be found here: https://github.com/leegilmorecode/serverless-caching

This part is going to cover caching within AWS AppSync.

🔵 Part 1 of this article covered caching at the API layer using Amazon API Gateway.

🔵 Part 2 of this article looked at caching at the database level using DynamoDB DAX.

🔵 Part 3 of this article looked at caching within the Lambda runtime environment itself.

🔵 This part will look at caching at the AppSync level.

🔵 Part 5 of this article will look at caching at the CDN level with CloudFront.

This article is sponsored by Sedai.io

Quick recap 👨🏫

The image below shows some of the areas in which you are able to cache within serverless solutions:

What are we building? 🏗️

As described in Part 1 of the series, this is what we are building out. In this article we are focusing on the area highlighted in pink, specifically caching in AppSync:

Serverless Blog ✔️

The serverless blog has the following flow:

⚪ A CloudFront distribution caches the React website which has an S3 bucket as its origin. We can cache the web app at this level.

⚪ The React app utilises a GraphQL API for accessing its data using AWS AppSync. For certain endpoints we may look to utilise AppSync caching.

⚪ The AppSync API resolves to DynamoDB through Lambda for its data, and we are using DAX as a cache sitting in front of the database. We can utilise DAX to cache at the database level here.

AWS News Blog ✔️

The AWS News blog has the following flow:

⚪ A CloudFront distribution caches the React website which has an S3 bucket as its origin. We can cache the web app at this level.

⚪ The React app utilises a REST API for its data using Amazon API Gateway. We have caching here at the API level within API Gateway.

⚪ For cache misses we use a Lambda function to retrieve the data from an Aurora Serverless database. We can also cache certain data within the Lambda function itself in this scenario.

💡 Note: this is the minimal code and architecture needed to discuss the key architecture points in the article, so it is not production ready and does not adhere to coding best practices (for example, there is no authentication on the endpoints). I have also tried not to split out the code too much, so that example files are easy to view with all dependencies in one file.

Firstly, what is AppSync? 👨💻

💡 Note: If you have a good understanding of AppSync, please feel free to skip to the next section.

Organisations choose to build APIs with GraphQL because it helps them develop applications faster, by giving front-end developers the ability to query multiple databases, microservices, and APIs with a single GraphQL endpoint.


“AWS AppSync is a fully managed service that makes it easy to develop GraphQL APIs by handling the heavy lifting of securely connecting to data sources like AWS DynamoDB, Lambda, and more. Adding caches to improve performance, subscriptions to support real-time updates, and client-side data stores that keep off-line clients in sync are just as easy. Once deployed, AWS AppSync automatically scales your GraphQL API execution engine up and down to meet API request volumes.” — https://aws.amazon.com/appsync/

The following video covers AppSync in more detail:

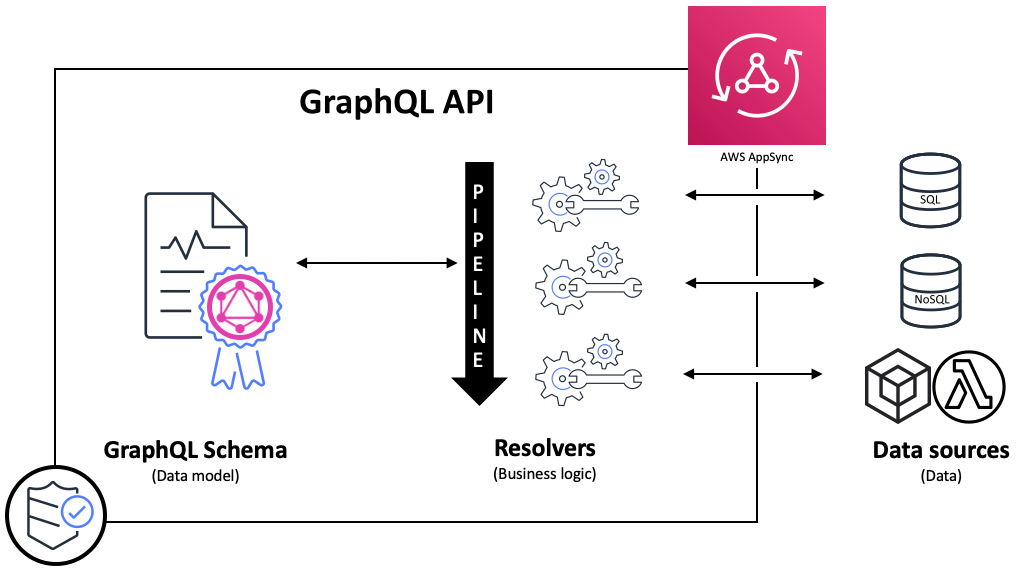

AWS AppSync has the notion of resolvers, which are built-in functions in GraphQL that “resolve” types or fields defined in the GraphQL schema with the data in the data sources. Resolvers in AppSync can be of two types: unit resolvers or pipeline resolvers.

“AppSync Pipeline Resolvers significantly simplify client-side application complexity and help enforce server-side business logic controls by orchestrating requests to multiple data sources. They can be used to enforce certain aspects of the application’s logic at the API layer.” — Ed Lima AWS

Pipeline resolvers offer the ability to serially execute operations against multiple data sources in a single API call, triggered by queries, mutations, or subscriptions. This is shown in the diagram below:

How does caching work in AppSync? 💭

AWS AppSync’s server-side data caching capabilities, backed by Amazon ElastiCache for Redis, make data available in a high-speed, in-memory cache, improving performance and decreasing latency. This reduces the need to directly access data sources, whether that be Lambda, DynamoDB, Aurora Serverless, HTTP endpoints or more.

“Caching is a strategy to improve the speed of query and data modification operations in your API while making less requests to data sources. With AppSync you can optionally provision a dedicated cache for your API’s unit resolvers, speeding up response time and taking load off backend services. Some customers have been able to leverage the simplicity and efficiency of server-side caching in AppSync to decrease database requests by 99%.” — Ed Lima — https://aws.amazon.com/blogs/mobile/appsync-pipeline-caching/

Caching within AppSync works at two levels:

🔵 Caching across the full API.

🔵 Caching at the per-resolver level (including pipeline resolvers).

Caching across the full API ✔️

If the data is not in the cache, it is retrieved from the data source and stored in the cache until the time to live (TTL) expires.

All subsequent requests to the API are returned from the cache. This means that data sources aren’t contacted directly unless the TTL expires. In this setting, AppSync uses the contents of the $context.arguments and $context.identity maps as caching keys.

💡 Note: The maximum TTL for AppSync is 3,600 seconds (1 hour), after which entries are automatically deleted.
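To make the hit/miss and TTL behaviour concrete, here is a toy in-memory model of full request caching. It is an illustrative sketch only: AppSync runs the real cache on managed ElastiCache for Redis, and the FullRequestCacheModel class and its resolve method are hypothetical names. The model keys each entry on the whole arguments and identity maps, serves hits until the TTL expires, and rejects TTLs outside the 1 to 3,600 second range.

```typescript
// Toy model of AppSync FULL_REQUEST_CACHING semantics (hypothetical, for
// illustration only; not how AppSync is implemented internally).
type Json = string | number | boolean | null | Json[] | { [key: string]: Json };

interface RequestContext {
  arguments: Record<string, Json>;
  identity: Record<string, Json> | null;
}

interface CacheEntry<T> {
  value: T;
  expiresAt: number; // epoch milliseconds
}

const MAX_TTL_SECONDS = 3600; // AppSync's maximum TTL (1 hour)

export class FullRequestCacheModel<T> {
  private readonly entries = new Map<string, CacheEntry<T>>();
  public hits = 0;
  public misses = 0;

  constructor(private readonly ttlSeconds: number) {
    if (ttlSeconds < 1 || ttlSeconds > MAX_TTL_SECONDS) {
      throw new RangeError(`TTL must be between 1 and ${MAX_TTL_SECONDS} seconds`);
    }
  }

  // Full request caching keys on the whole arguments and identity maps.
  // (JSON.stringify is sensitive to property order; fine for a toy model.)
  private keyFor(ctx: RequestContext): string {
    return JSON.stringify([ctx.arguments, ctx.identity]);
  }

  resolve(ctx: RequestContext, dataSource: () => T, now: number = Date.now()): T {
    const key = this.keyFor(ctx);
    const entry = this.entries.get(key);
    if (entry && entry.expiresAt > now) {
      this.hits++;
      return entry.value; // cache hit: the data source is not called
    }
    this.misses++;
    const value = dataSource(); // cache miss: call the data source
    this.entries.set(key, { value, expiresAt: now + this.ttlSeconds * 1000 });
    return value;
  }
}
```

Sending twelve identical requests through this model within a 30-second TTL yields one miss followed by eleven hits, which is the same pattern the CloudWatch metrics show in the testing section of this article.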

Caching per resolver level ✔️

With this setting, each resolver must be explicitly opted in for it to cache responses.

💡 Note: This is important in our example, as the other GraphQL endpoints don’t require caching in AppSync; we are explicitly caching them at the database level with DynamoDB DAX.

You can specify a TTL and caching keys on the resolver. Caching keys that you can specify are values from the $context.arguments, $context.source, and $context.identity maps.

The TTL value is mandatory, but the caching keys are optional. If you don't specify any caching keys, the defaults are the contents of the $context.arguments, $context.source, and $context.identity maps.

For example, we might use $context.arguments.id or $context.arguments.InputType.id, $context.source.id and $context.identity.sub, or $context.identity.claims.username. When you specify only a TTL and no caching keys, the behavior of the resolver is similar to full API caching.

Let’s have a look at setting up per-resolver caching using the AWS CDK:
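The following is a sketch of what the per-resolver setup could look like, assuming aws-cdk-lib v2 and an existing GraphQL API and Lambda function passed in as arguments (you would call it from your stack’s constructor). The Query.getBlogNoDax field, the $context.arguments.id caching key and the 30-second resolver TTL come from this article; the construct IDs, the SMALL cache instance type and the API cache’s own 30-second TTL are illustrative assumptions.

```typescript
// Sketch assuming aws-cdk-lib v2. Construct IDs, cache size and the API cache
// TTL are illustrative assumptions, not taken from the article's repository.
import * as cdk from "aws-cdk-lib";
import * as appsync from "aws-cdk-lib/aws-appsync";
import * as lambda from "aws-cdk-lib/aws-lambda";
import { Construct } from "constructs";

export function addPerResolverCaching(
  scope: Construct,
  api: appsync.IGraphqlApi,
  getBlogFn: lambda.IFunction,
): appsync.Resolver {
  // 1. Lambda data source backed by the function that reads a blog post.
  const dataSource = api.addLambdaDataSource("GetBlogNoDaxDataSource", getBlogFn);

  // 2. Resolver that opts in to caching: entries are keyed on the blog id
  //    argument and live for 30 seconds.
  const resolver = new appsync.Resolver(scope, "GetBlogNoDaxResolver", {
    api,
    dataSource,
    typeName: "Query",
    fieldName: "getBlogNoDax",
    requestMappingTemplate: appsync.MappingTemplate.lambdaRequest(),
    responseMappingTemplate: appsync.MappingTemplate.lambdaResult(),
    cachingConfig: {
      ttl: cdk.Duration.seconds(30),
      cachingKeys: ["$context.arguments.id"],
    },
  });

  // 3. Provision the API cache in per-resolver mode, so only resolvers that
  //    declare a cachingConfig (like the one above) are cached.
  const apiCache = new appsync.CfnApiCache(scope, "ApiCache", {
    apiId: api.apiId,
    apiCachingBehavior: "PER_RESOLVER_CACHING", // vs FULL_REQUEST_CACHING
    type: "SMALL",
    ttl: 30,
  });
  resolver.node.addDependency(apiCache);

  return resolver;
}
```

Switching apiCachingBehavior to FULL_REQUEST_CACHING would instead cache every resolver on the API, which is not what we want here, since the other endpoints are already cached by DAX.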

We can see from the simple code snippet above that we:

- Create the Lambda data source associated with the Lambda function.

- Create a resolver for the data source above, setting the caching key to $context.arguments.id with a TTL of 30 seconds (essentially caching all instances of a given blog by ID).

- Finally, add caching to the overall AppSync API, specifically per-resolver caching. (This will create the cache and associate it with the API.)

“With the expansion to pipeline resolver caching, customers who use full request caching immediately see the benefits of the cache changes as pipeline resolvers are included as part of full API caching”

How do we use caching keys? 🔑

When thinking about your caching keys, it is important to think about the context and way in which you want to cache. For example, in our case you may want to cache all blog posts regardless of who is accessing them. Or you may want to cache blog posts depending on geographical region.

If this were something more specific to a particular user, say their latest messages from friends, then you would more than likely also want to include $context.identity.sub in your caching keys (i.e. the identity of the particular user).
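As a hypothetical illustration of how the choice of caching keys partitions the cache, the helper below resolves key paths such as $context.arguments.id against a request context and builds a composite key from their values. AppSync performs this internally; the cacheKey function and ResolverContext type are made up for this example.

```typescript
// Hypothetical model of caching-key resolution (illustration only).
interface ResolverContext {
  arguments: Record<string, unknown>;
  source: Record<string, unknown> | null;
  identity: Record<string, unknown> | null;
}

// Resolve a path such as "$context.identity.sub" against the context.
function lookup(ctx: ResolverContext, path: string): unknown {
  const segments = path.replace(/^\$context\./, "").split(".");
  let current: unknown = ctx;
  for (const segment of segments) {
    if (current === null || typeof current !== "object") return undefined;
    current = (current as Record<string, unknown>)[segment];
  }
  return current;
}

// Requests producing the same string would share one cache entry.
export function cacheKey(ctx: ResolverContext, cachingKeys: string[]): string {
  return JSON.stringify(cachingKeys.map((path) => [path, lookup(ctx, path) ?? null]));
}

const alice = { arguments: { id: "42" }, source: null, identity: { sub: "alice" } };
const bob = { arguments: { id: "42" }, source: null, identity: { sub: "bob" } };

// Keyed on the blog id only: both users share the same cached blog post.
const shared = ["$context.arguments.id"];
console.log(cacheKey(alice, shared) === cacheKey(bob, shared)); // true

// Keyed on id plus identity: each user gets their own cache entry.
const perUser = ["$context.arguments.id", "$context.identity.sub"];
console.log(cacheKey(alice, perUser) === cacheKey(bob, perUser)); // false
```

The trade-off is hit rate: adding $context.identity.sub keeps user-specific data private, but every user now warms their own entries, so fewer requests are served from the cache.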

How do we invalidate the cache? 🗑️

When you set up AWS AppSync’s server-side caching, you can configure a maximum TTL. This value defines the amount of time that cached entries are stored in memory.

In situations where you must remove specific entries from your cache (say, when the underlying data has been updated or removed and your cache entry is now stale), you can use AWS AppSync’s evictFromApiCache extensions utility in your resolver's request or response mapping template.

An example of invalidating the cache in our scenario would be the following (the underlying updating of the item in DynamoDB removed for clarity):
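Here is a sketch of the updateBlog mutation resolver wired up with aws-cdk-lib v2 (assumed). The DynamoDB write is reduced to CDK’s built-in PutItem helper so that the eviction logic in the response mapping template stays in focus. It assumes the mutation receives an input argument containing the blog id, and that getBlogNoDax caches on $context.arguments.id; the keys in the map passed to evictFromApiCache are written without the leading $. The construct IDs and exported names are hypothetical.

```typescript
// Sketch assuming aws-cdk-lib v2 and a mutation argument "input" with an "id".
import * as appsync from "aws-cdk-lib/aws-appsync";
import * as dynamodb from "aws-cdk-lib/aws-dynamodb";
import { Construct } from "constructs";

// Response template: evict the cached getBlogNoDax entry for this blog id,
// then return the DynamoDB result to the caller.
export const UPDATE_BLOG_RESPONSE_TEMPLATE = `
#set($cachingKeys = {})
$util.qr($cachingKeys.put("context.arguments.id", $context.arguments.input.id))
$extensions.evictFromApiCache("Query", "getBlogNoDax", $cachingKeys)
$util.toJson($context.result)
`;

export function addUpdateBlogResolver(
  scope: Construct,
  api: appsync.IGraphqlApi,
  blogsTable: dynamodb.ITable,
): appsync.Resolver {
  const dataSource = api.addDynamoDbDataSource("BlogsTableDataSource", blogsTable);

  return new appsync.Resolver(scope, "UpdateBlogResolver", {
    api,
    dataSource,
    typeName: "Mutation",
    fieldName: "updateBlog",
    // Request: PutItem keyed on input.id, writing the fields of input.
    requestMappingTemplate: appsync.MappingTemplate.dynamoDbPutItem(
      appsync.PrimaryKey.partition("id").is("input.id"),
      appsync.Values.projecting("input"),
    ),
    responseMappingTemplate: appsync.MappingTemplate.fromString(UPDATE_BLOG_RESPONSE_TEMPLATE),
  });
}
```

Placing the eviction in the response template means it only runs after the PutItem has completed, so the stale entry is removed once the new data is in DynamoDB.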

The arguments for the extension method evictFromApiCache are the type name, the field name, and the caching keys.

You can add this code to your VTL templates in either the request or response mapping template of the endpoint that updates the resource.

If an entry is successfully cleared, the response contains an apiCacheEntriesDeleted value in the extensions object that shows how many entries were deleted:

"extensions": { "apiCacheEntriesDeleted": 1 }

Getting Started! ✔️

To get started, clone the following repo with the following git command:

git clone https://github.com/leegilmorecode/serverless-caching

This will pull down the example code to your local machine.

Deploying the solution! 👨💻

🛑 Note: Running the following commands will incur charges on your AWS account, and some services are not in the free tier.

In the ‘serverless-blog’ folder of the repo run the following command to install all of the dependencies:

npm i

Once you have done this, run the following command to deploy the solution:

npm run deploy

🛑 Note: Remember to tear down the stack when you are finished, using ‘npm run remove’, so that you won’t continue to be charged.

💡 Note: We use a CustomResource as part of the deploy to create the blogs table and populate it with some dummy data, so you can use it straight away.

Testing the solution 🎯

We now have the following architecture in place for this AppSync API with regards to caching, with the key points of interest highlighted in pink circles below:

Let’s have a look at testing the caching to see it in action.

Using the console, we can run the following getBlogNoDax query 12 times in a short period:

We can then see in CloudWatch that we have 1 cache miss (the initial call) and 11 cache hits (the subsequent calls once the cache was populated, which are noticeably quicker). These cached calls also did not hit DynamoDB, so we save on read capacity costs.

We can then go a step further and look at the ServiceLens Map from X-Ray to see what this looks like with regards to caching and latency:

We can see that the latency from the cache once populated is:

Latency (avg): <1ms

Requests: 1.63/min

Faults: 0.00/min

And latency on the initial cache miss (Lambda) is:

Latency (avg): 567ms

Requests: 0.13/min

Faults: 0.00/min
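Putting the two sets of numbers together gives a rough feel for the overall benefit. The sketch below is back-of-the-envelope arithmetic using this test’s figures (11 hits and 1 miss, under 1ms cached and 567ms uncached). It treats “<1ms” as 1ms, so the result is an upper bound rather than a measured value; the function names are illustrative.

```typescript
// Back-of-the-envelope estimate from the figures above (not a measurement).
export function hitRatio(hits: number, misses: number): number {
  const total = hits + misses;
  return total === 0 ? 0 : hits / total;
}

// Weighted average latency across cache hits and misses.
export function expectedLatencyMs(
  hits: number,
  misses: number,
  hitLatencyMs: number,
  missLatencyMs: number,
): number {
  const ratio = hitRatio(hits, misses);
  return ratio * hitLatencyMs + (1 - ratio) * missLatencyMs;
}

const ratio = hitRatio(11, 1);
const latency = expectedLatencyMs(11, 1, 1, 567);
console.log(`hit ratio: ${(ratio * 100).toFixed(1)}%`); // hit ratio: 91.7%
console.log(`average latency: ~${latency.toFixed(1)} ms vs 567 ms uncached`); // ~48.2 ms
```

In other words, with roughly 9 in 10 requests served from the cache, the average request in this test costs under a tenth of an uncached Lambda call.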

Testing cache invalidation

We will next look at testing the cache invalidation.

As the cache is still populated from our earlier getBlogNoDax queries, we can clear it by updating the blog post with our updateBlog mutation, as shown below:

We can see from the mutation’s response that the ‘apiCacheEntriesDeleted’ property has come back with the value 1. This indicates that our update has invalidated the cache entry for this particular blog post.

If you do two updates in a row, you will see that this property is only returned on the first call, as there is nothing left in the cache when you call the mutation a second time.
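If you want to check this from a client or an integration test rather than the console, a small helper can read the value from the GraphQL response. This is a hypothetical sketch: the GraphQLResponse type and cacheEntriesDeleted function are illustrative. It treats a missing apiCacheEntriesDeleted property as zero evictions, matching the second-update behaviour described above.

```typescript
// Hypothetical helper for reading AppSync's eviction count from a response.
interface GraphQLResponse<TData> {
  data?: TData;
  errors?: Array<{ message: string }>;
  extensions?: { apiCacheEntriesDeleted?: number; [key: string]: unknown };
}

export function cacheEntriesDeleted(response: GraphQLResponse<unknown>): number {
  // The property is absent when nothing was evicted, so default to 0.
  return response.extensions?.apiCacheEntriesDeleted ?? 0;
}

// First update: the cached getBlogNoDax entry is evicted.
const firstUpdate: GraphQLResponse<unknown> = {
  data: { updateBlog: { id: "1" } },
  extensions: { apiCacheEntriesDeleted: 1 },
};
// Second update straight afterwards: nothing left in the cache to evict.
const secondUpdate: GraphQLResponse<unknown> = { data: { updateBlog: { id: "1" } } };

console.log(cacheEntriesDeleted(firstUpdate)); // 1
console.log(cacheEntriesDeleted(secondUpdate)); // 0
```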

Let’s finally look at the code which actually performs the cache invalidation following a successful update of a blog post:

You can see that our request mapping template performs a PutItem in DynamoDB using the input passed into the mutation, and our response mapping template then evicts the cached getBlogNoDax query result with the same caching key (i.e. the blog ID), before finally returning the result to the consumer.

What are the advantages and disadvantages? 📝

So now that we have covered how we can cache within AppSync, what are the advantages and disadvantages?

Advantages ✔️

🔵 Improved performance and greatly reduced latency for end users.

🔵 We don’t need to manage the underlying cache instances (i.e. installing patches etc).

🔵 We could potentially reduce the number of requests to (and therefore the cost of) downstream services such as DynamoDB dramatically; AWS cites some customers cutting database requests by as much as 99%.

🔵 We could potentially reduce the load on legacy downstream services.

Disadvantages ❌

🔵 Although we don’t need to manage the instance, we are still limiting the scalability of our Serverless solutions with the introduction of non-serverless services.

🔵 There is a cost associated with the underlying cache instances, which could become very expensive depending on your use case and throughput. Note that the instance types range from small (1 vCPU, 1.5 GiB RAM) to 12xlarge (48 vCPU, 317.77 GiB RAM, 10 Gigabit network performance), depending on your specific requirements.

Wrapping up 👋

I hope you found that useful!

Please go and subscribe on my YouTube channel for similar content!

I would love to connect with you also on any of the following:

https://www.linkedin.com/in/lee-james-gilmore/

https://twitter.com/LeeJamesGilmore

If you found the articles inspiring or useful, please feel free to support me with a virtual coffee https://www.buymeacoffee.com/leegilmore and either way let's connect and chat! ☕️

If you enjoyed the posts please follow my profile Lee James Gilmore for further posts/series, and don’t forget to connect and say Hi 👋

Please also use the ‘clap’ feature at the bottom of the post if you enjoyed it! (You can clap more than once!!)


About me

“Hi, I’m Lee, an AWS Community Builder, Blogger, AWS certified cloud architect and Principal Software Engineer based in the UK; currently working as a Technical Cloud Architect and Principal Serverless Developer, having worked primarily in full-stack JavaScript on AWS for the past 5 years.

I consider myself a serverless evangelist with a love of all things AWS, innovation, software architecture and technology.”

** The information provided represents my own personal views and I accept no responsibility for the use of the information. **